In part four of our ongoing series “Advancing Continuing Medical Education: Reflecting on the Past and Charting a Path Forward,” we shift focus to outcomes measurement as an integrated part of the educational design, identifying the current state of outcomes reporting and looking to the future. Over the past decade, outcomes measurement has become an integral part of the design of most continuing medical education (CME) initiatives. As a community, we have grown more sophisticated in our ability to gather, analyze and report outcomes data; however, there is wide variation in the types and formats of outcomes data generated and reported.

In an effort to understand current views of stakeholders within the CME community related to outcomes design and reporting, we conducted a survey between April and May 2023. The survey questions aimed to delve into the stakeholders' views on the present state of outcomes reporting, awareness of outcomes standardization efforts, and perceived issues with outcomes reporting. The initial survey data was reported at the Alliance Industry Summit meeting on May 2, 2023. The survey remained open for two weeks following the presentation to gather additional responses.

A total of 70 responses were collected, representing a diverse range of CME stakeholders. Of the responses received, 32 were from individuals from pharmaceutical companies supporting independent medical education (IME) grants (IME supporters), while the remaining 38 responses came from professionals associated with educational providers, professional societies or associations, academic medicine or medical schools, and those providing CME-related consulting or services (IME provider/collaborator).

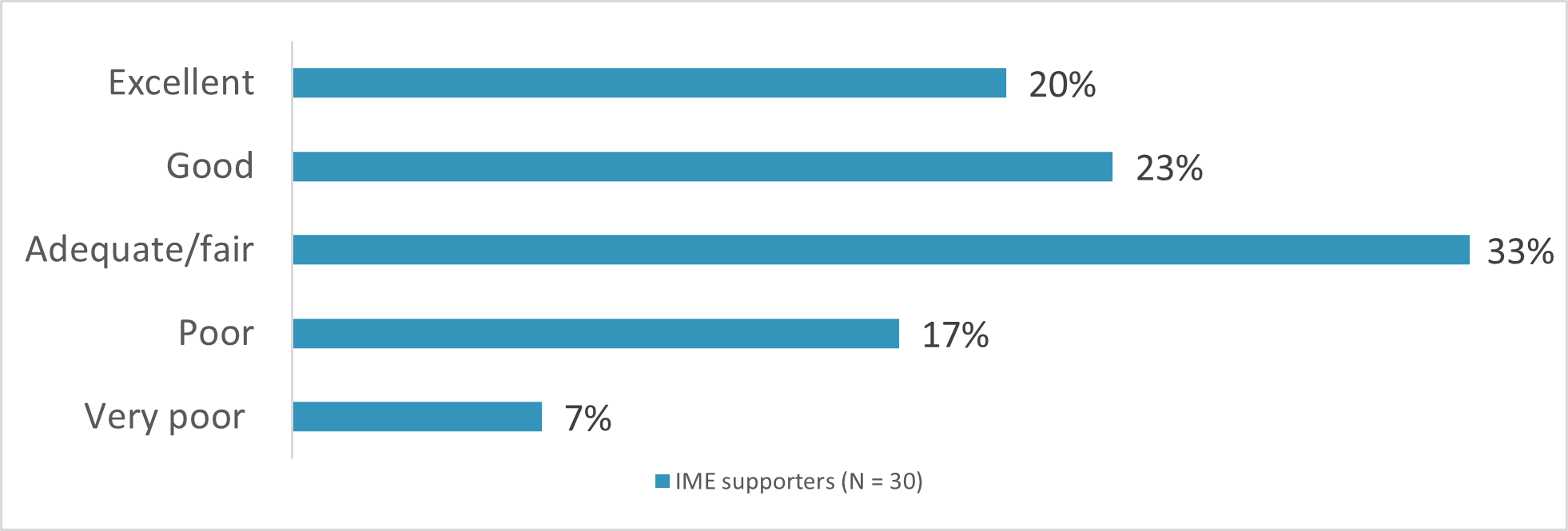

Based on the survey results, how are we doing as a community in reporting outcomes? Two-thirds of the IME supporters perceive that outcomes reports have generally improved over the past five years. While they perceive the overall quality has improved, they report that, on average, almost one-quarter of the reports received are of poor quality (Figure 1).

Figure 1: Question to IME Supporters: Of the outcomes reports that you receive, what percent of the reports are:

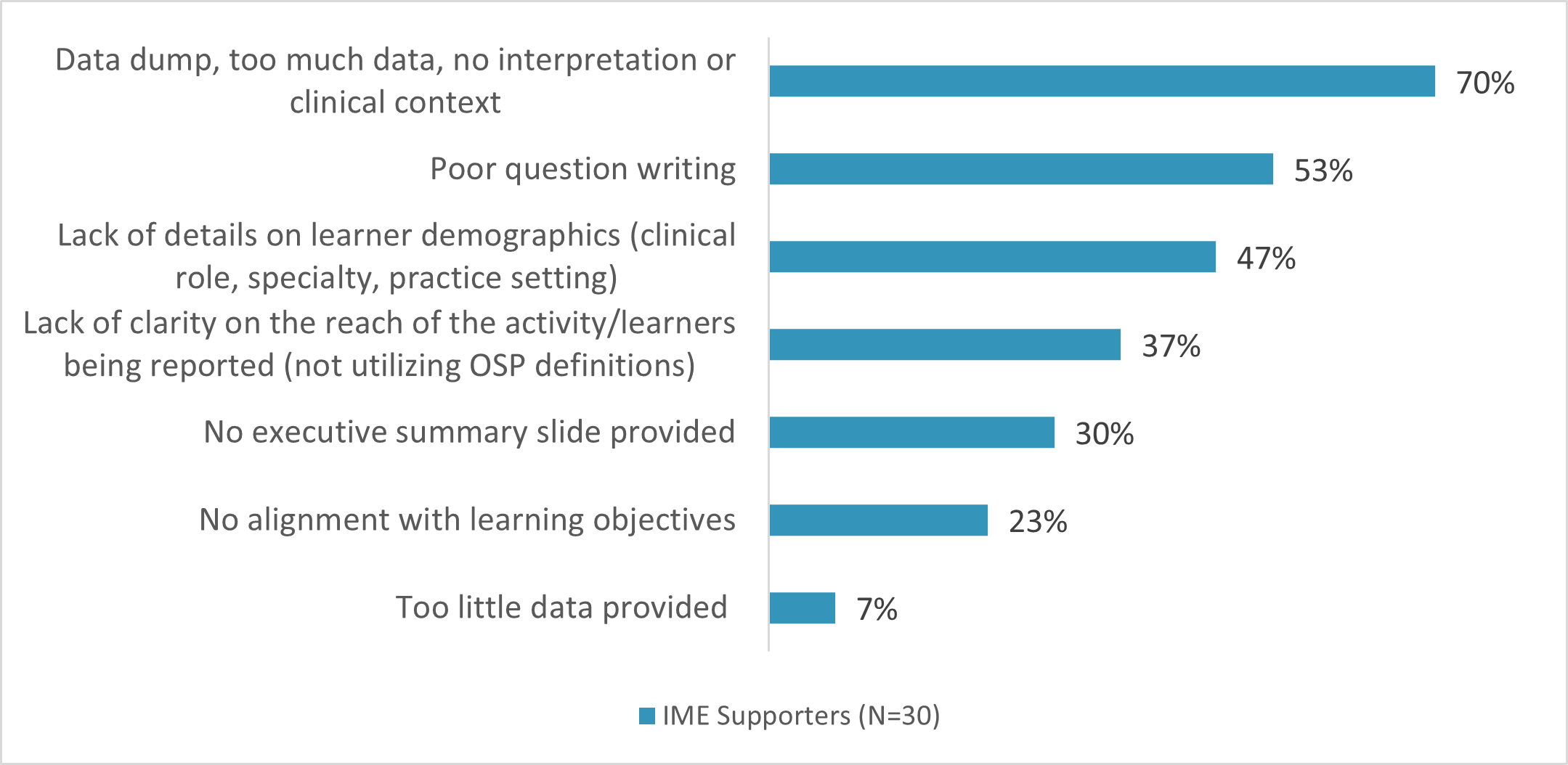

The survey sought to understand the biggest issues that IME supporters see with current outcomes reports. Over two-thirds of IME supporters reported that “data dump, too much data without interpretation of clinical context” was an issue in the reports they receive, while over half reported “poor question writing” as a problematic issue (Figure 2). Further issues that came to the top were related to the reporting of learner participation and demographic details.

In addition to selecting up to three options from a multiple-choice question, IME supporters had the opportunity to provide open-response commentary on the most problematic issues with outcomes reports. One IME supporter stated, “I would have selected all of the above (as) problematic issues. Most of it is driven by poor planning and execution, along with a push by many organizations to generalize a cross-supporter plan/outcomes/template, etc. …” Another IME supporter conveyed that there is an overreliance on learner demographics and not enough focus on more meaningful outcomes: “Demographics and change in question answer before and after activity is not enough anymore. [We] need to see practice changing outcomes (chart pulls, publications, etc.).” Additionally, an IME supporter noted, “Often, outcomes use too much med-ed speak and have very busy slides. The information is good and interesting for me, but in order to share with my colleagues, I often need to recreate the information into a tighter narrative to try to tell a story … I am always asked to show the impact of med ed.”

Figure 2: Question to IME Supporters: Which of the following are the most problematic issues that you face with the outcomes reports you currently receive? (select up to three)

Efforts to standardize outcomes terminology have taken place over the past five years, including the Outcomes Standardization Project and Alliance Taxonomy Taskforce. The Outcomes Standardization Project (OSP) yielded a robust set of definitions that is currently available at Outcomes in CE — The Standardization Project. The survey sought to understand the adoption of the OSP definitions among both the IME supporter and the educational provider/collaborator communities. Of the IME supporters, 60% are utilizing the OSP definitions, while 20% are using their own definitions and 20% are not utilizing any definitions. There was slightly higher adoption among the IME provider/collaborator community, with 70% reporting they utilize the definitions, while 21% have their own definitions that they utilize in reporting outcomes.

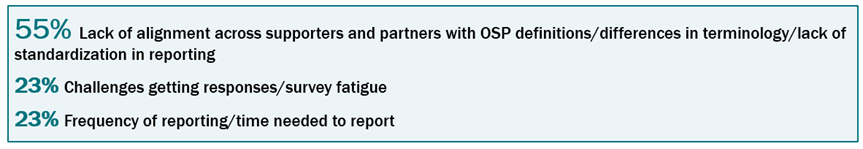

Additional questions targeted specifically to the educational provider/collaborator community were developed to understand perspectives and challenges related to outcomes reporting. The largest challenge, reported by just over half of respondents, is a lack of alignment across supporters and partners with OSP definitions, differences in terminology or a lack of standardization in reporting (Figure 3).

Comments from educational providers/collaborators provide additional context on the challenges and frustrations that stem from a lack of standardization. One comment focused on the need for adoption of the OSP definitions: “We need more transparency around data and more support/adoption of OSP by supporters. It can't just be providers trying to raise the bar or they do so at their own competitive disadvantage.” Another provider/collaborator concluded: “It is very hard to standardize our outcomes reports when supporters want different pieces of information highlighted.” A third provider/collaborator reiterated, “There needs to be a unified approach to reporting on the basics (e.g., a standard form tasking providers with comparing their proposed outcomes from the grant to the actual results) in three slides.”

Figure 3: Question to Educational Provider/Collaborators: What are the biggest challenges you have with outcomes reporting? (open-ended, list top two)

The results from this survey highlight that while outcomes reporting has generally improved over the past five years, both IME providers/collaborators and IME supporters remain frustrated with the current state of outcomes reporting.

This series explores the evolution of CME to best meet the needs of healthcare providers; in that evolution, we must consider the critical role that outcomes data plays in demonstrating the impact of CME efforts. As a united CME community of IME supporters, providers and collaborators, we must continue to push outcomes planning to be considered as part of the educational design, be integrated into the overall educational plan, and be reported in a manner that clearly conveys the impact on achieving educational aims. Outcomes data is a powerful tool to show how education is making a difference in enhancing clinician practice and improving patient health. We must continue to strive for improvements in how we articulate outcomes data to ensure data is being presented in a clear and concise manner to allow this impact to be recognized.

Emily Belcher is affiliated with CE Outcomes, LLC.

Wendy Cerenzia, MS, is affiliated with CE Outcomes, LLC.

Check out more from this series: Part 1, Part 2 and Part 3.