At the Alliance Annual Conference in January 2025, I was honored to lead a session more than five years in the making. The session introduced the critical need for a new perspective on assessment in continuing professional development (CPD) and continuing education (CE). While the session drew a standing-room-only crowd, my goal with this article is to share the lessons of my research with the widest possible audience in the hope that the broader community might benefit.

I have structured this article around the four critical sections of my #Alliance25 presentation. I hope you enjoy it!

1. Remember Why Assessment in CPD/CE Is Critical

In CPD/CE, assessment is often viewed as a closing act — a measurement of what participants learned. Assessment, when properly understood, is not simply a rearview mirror. It is a core learning intervention, an opportunity to diagnose needs, spark reflection, refine educational design, segment audiences for tailored impact, and identify persistent gaps.

To leverage assessment data effectively, we must answer at least five key questions:

- What were the baseline needs of our learners?

- How much impact did our education have?

- How can we adapt and improve our programs in real time?

- How do different subgroups of learners respond differently?

- Where does significant outstanding need remain?

Traditional assessments focus heavily on the second question, impact, sometimes to the exclusion of the others. In truth, great assessments shape learning just as much as they measure it. They challenge assumptions, surface uncertainty, and create moments for deeper cognitive processing: the very moments that drive long-term change. As Confucius suggested centuries ago, "Real knowledge is to know the extent of one's ignorance." Confidence-Based Assessment (CBA) fits naturally into this broader, more ambitious view of why we assess in CPD/CE.

"Confidence-Based Assessment doesn’t just measure what learners know; it reveals how ready they are to act on it."

2. Limitations in Traditional Assessment

Despite our best intentions, the traditional multiple-choice question (MCQ) framework, ubiquitous across CPD/CE, carries structural weaknesses. Chief among them is guessing. When participants guess, assessment data becomes polluted. Even with four- and five-choice MCQs, learners who guess well can pass tests without truly mastering the content. This creates a false sense of success for both educators and learners.
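To make the guessing problem concrete, here is a small worked sketch. It assumes a hypothetical 10-question post-test with a pass mark of 7 correct, and a learner who truly knows 6 answers and guesses blindly (uniformly at random, with no option elimination) on the other 4. These numbers are illustrative only and are not drawn from the ArcheMedX data; the function name is likewise hypothetical. The chance of passing is simply a binomial tail over the guessed items.

```python
from math import comb


def prob_pass_by_guessing(n_questions: int, n_known: int,
                          n_choices: int, pass_mark: int) -> float:
    """Probability of reaching `pass_mark` correct answers when the learner
    knows `n_known` answers and guesses uniformly at random on the rest.

    Assumes blind guessing, so each guess is independently correct with
    probability 1 / n_choices.
    """
    if not 0 <= n_known <= n_questions:
        raise ValueError("n_known must be between 0 and n_questions")
    n_guess = n_questions - n_known
    p = 1 / n_choices                        # chance one blind guess is right
    needed = max(0, pass_mark - n_known)     # lucky guesses required to pass
    # Binomial tail: P(at least `needed` correct guesses out of `n_guess`).
    return sum(
        comb(n_guess, k) * p**k * (1 - p) ** (n_guess - k)
        for k in range(needed, n_guess + 1)
    )


if __name__ == "__main__":
    # Hypothetical learner: knows 6 of 10 answers; pass mark is 7 of 10.
    for choices in (4, 5):
        prob = prob_pass_by_guessing(10, 6, choices, 7)
        print(f"{choices}-choice MCQs: P(pass) = {prob:.2f}")
```

Under these assumptions, a learner who has mastered only 60% of the content passes roughly two times in three on four-choice items and more than half the time on five-choice items, and a traditional correct/incorrect score cannot distinguish those lucky passes from genuine mastery.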

Further, traditional MCQs reduce knowledge to a binary correct/incorrect construct, ignoring the richer reality of how learners feel about what they know. A learner who answers correctly but is filled with doubt is vastly different from one who answers correctly with full confidence, yet traditional scoring collapses these two states into one. A learner who surfaces doubt in a pre-assessment will often have that knowledge and competence reinforced by the CPD/CE activity; in the post-assessment they may answer correctly just as before, but their confidence in that knowledge and competence can rise dramatically.

Ultimately, by measuring only knowledge and competence, we leave the most important impact unexplored: the cognitive, affective, and metacognitive dimensions that more strongly predict real-world behavior change.

Table 1: Limitations of Traditional MCQ Assessment

| Limitation | Impact |
|---|---|
| Guessing distorts scores | Inflated success rates, unreliable measurement |
| Binary outcomes (correct/incorrect) | Loss of learner self-awareness and nuance |
| Focus on immediate knowledge only | Weak predictors of real-world behavior change |
| Inflexibility for agile program design | Limits opportunities for rapid improvement |

"Reducing guessing by 83% isn’t just a statistic — it's a clear signal that learners are truly mastering the material."

3. Introducing the ‘Gold Standard’ CBA Methodology

While there are many methodological approaches to assessing confidence, not all are created equal, and many provide little to no value. You may be familiar with a ubiquitous approach to asking confidence questions in an evaluation, for example: “After completing this activity, how confident are you in your understanding of diabetes management?” Research has clearly demonstrated that data from such untethered, self-reported statements are inconsistent and provide almost no value.

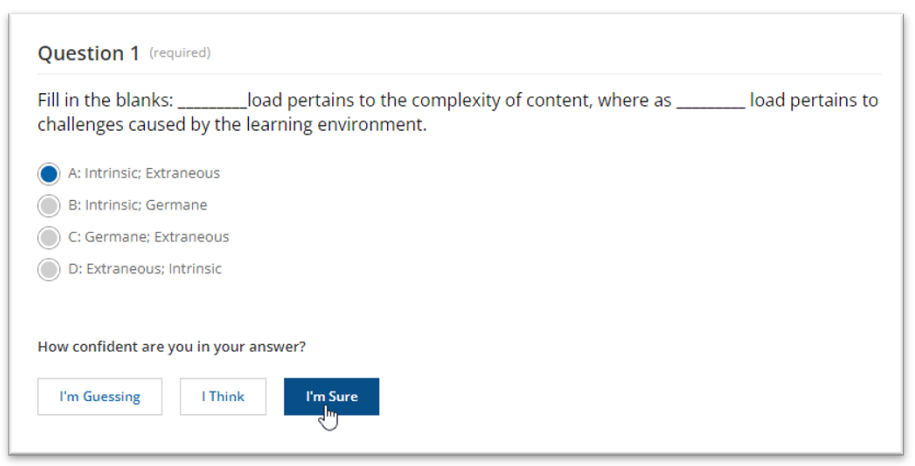

The ‘Gold Standard’ approach to CBA addresses these limitations by adding a second dimension to every knowledge question: the learner's confidence. Tightly coupling each confidence rating to a specific assessment question avoids the vague, general self-report of confidence typical of an evaluation. Instead of merely answering an MCQ, the learner also rates how certain they are in their response:

- Am I guessing?

- Do I think I know?

- Am I sure I know?

Here is how we present the CBA question design within the ArcheMedX Ready Learning Platform:

This seemingly simple addition to assessments transforms the test from a binary exercise into a multi-dimensional portrait of the learner's state. With CBA, each question response falls into one of five meaningful categories:

Table 2: CBA Response Categories

| Category | Description |
|---|---|
| Mastery | Correct and confident |
| Doubt | Correct but uncertain |
| Guessing | Correct or incorrect, but guessing |
| Uninformed | Incorrect but uncertain |
| Misinformed | Incorrect and confident |
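The categories in Table 2 amount to crossing the correctness of an answer with the learner's confidence rating. The sketch below assumes the three prompts above map to confidence levels as follows: "Am I guessing?" means guessing, "Do I think I know?" means uncertain, and "Am I sure I know?" means confident. The enum and function names are hypothetical illustrations, not the ArcheMedX Ready Learning Platform's implementation.

```python
from enum import Enum


class Confidence(Enum):
    """Hypothetical labels for the three confidence prompts (assumed mapping)."""
    GUESSING = "Am I guessing?"
    THINK_I_KNOW = "Do I think I know?"   # treated as "uncertain"
    SURE_I_KNOW = "Am I sure I know?"     # treated as "confident"


def classify_response(is_correct: bool, confidence: Confidence) -> str:
    """Map one answer plus its confidence rating to a Table 2 category."""
    if confidence is Confidence.GUESSING:
        # A guess is reported as Guessing whether or not it happens to be right.
        return "Guessing"
    if confidence is Confidence.SURE_I_KNOW:
        return "Mastery" if is_correct else "Misinformed"
    # Remaining case: THINK_I_KNOW, some knowledge but not certainty.
    return "Doubt" if is_correct else "Uninformed"


if __name__ == "__main__":
    print(classify_response(True, Confidence.SURE_I_KNOW))
    print(classify_response(False, Confidence.SURE_I_KNOW))
    print(classify_response(False, Confidence.GUESSING))
```

Checking for guessing first mirrors the table, where Guessing is the only category that does not depend on correctness.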

By diagnosing whether a learner is confidently correct, uncertain, misinformed, or guessing, educators can better tailor feedback and remediation. Importantly, CBA builds metacognitive awareness: learners recognize not just what they know, but how sure they are. This matters because confidence predicts retention; learners who indicated high confidence in their knowledge retained nearly four times as much of what they learned over time as those with doubt. We must embrace the idea that the goal of CPD/CE is to enhance Mastery and mitigate “error-gance,” the confident error of the Misinformed.

"In the gap between knowledge and behavior change, CBA is our bridge."

4. Lessons Learned From Analyzing 10,000,000 CBA Responses

Over the past five years, we at ArcheMedX have collected and analyzed more than 10 million learner responses using the ‘Gold Standard’ CBA methodology. This unprecedented dataset reveals powerful insights about how CBA transforms both assessment and learning.

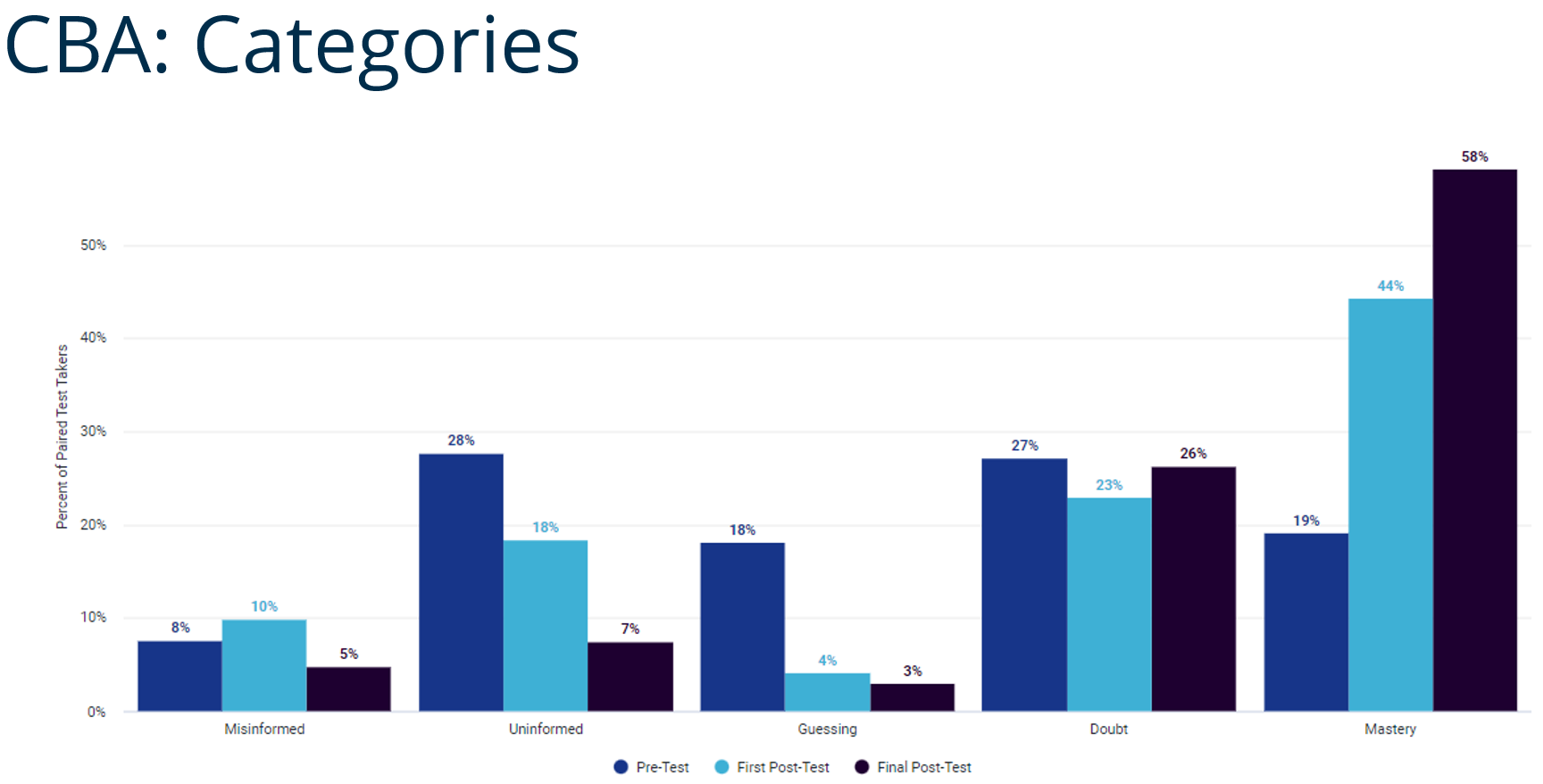

a) Dramatic Reductions in Guessing

Data from hundreds of thousands of CPD/CE learners showed that, on pre-assessments, 18% of learners were guessing at the answers. After truly effective CPD/CE interventions, that figure fell to approximately 4%, an 83% reduction. This shows that CPD/CE activities aren't just transferring information; they are making learners more certain about their knowledge, a vital predictor of durable learning and future application.

b) Significant Growth in Mastery

Data from these same learners and interventions showed that Mastery (correct and confident) increased by over 130% from pre- to post-assessment. As noted above, prior research has found that highly confident learners retained 91% of what they learned, while uncertain learners retained only 25% over time (Hunt 2003). Thus, when CPD/CE interventions foster (and measure) Mastery, they bend the "forgetting curve" in their favor.
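Pre-to-post comparisons such as the guessing reduction and the Mastery growth above can be expressed as the relative change in a category's share of responses, (post - pre) / pre. The sketch below assumes each response has already been labeled with a Table 2 category; the function names and the ten-response toy data are hypothetical and are not the ArcheMedX dataset.

```python
from collections import Counter

CATEGORIES = ("Mastery", "Doubt", "Guessing", "Uninformed", "Misinformed")


def category_shares(labels: list[str]) -> dict[str, float]:
    """Share (0 to 1) of labeled responses falling in each CBA category."""
    if not labels:
        raise ValueError("need at least one labeled response")
    counts = Counter(labels)
    return {cat: counts[cat] / len(labels) for cat in CATEGORIES}


def relative_change(pre: float, post: float) -> float:
    """Relative change from pre to post; -0.75 means a 75% reduction."""
    if pre == 0:
        raise ValueError("pre-assessment share must be non-zero")
    return (post - pre) / pre


if __name__ == "__main__":
    # Hypothetical toy data: ten pre- and ten post-assessment responses.
    pre = ["Guessing"] * 4 + ["Doubt"] * 3 + ["Mastery"] * 2 + ["Misinformed"]
    post = ["Guessing"] + ["Doubt"] * 2 + ["Mastery"] * 6 + ["Misinformed"]
    before, after = category_shares(pre), category_shares(post)
    for cat in ("Guessing", "Mastery", "Misinformed"):
        change = relative_change(before[cat], after[cat])
        print(f"{cat}: {before[cat]:.0%} -> {after[cat]:.0%} ({change:+.0%})")
```

Applied to the misinformed figures reported below (8% to 10%), the same calculation gives a 25% relative increase even though the absolute change is two percentage points, so reporting both views helps avoid overstating or understating a shift.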

c) Identifying and Addressing the Misinformed

CBA also reveals that a small but material group of learners becomes misinformed following CPD/CE: in the same dataset, the percentage of misinformed learners rises from 8% to 10% immediately after educational interventions. Rather than seeing this as a failure, educators should see it as an opportunity for targeted remediation. These learners are at high risk for persistent performance errors, but with proper feedback and reflective interventions, they can recalibrate their understanding.

d) Minimal Sociologic Bias

Finally, we investigated concerns that CBA might introduce gender-based bias by analyzing responses from learners with the most common traditional Anglo-Saxon male and female birth names (an approach with acknowledged limitations). Confidence patterns differed by only 1% between these groups, reassuring evidence that CBA can be broadly and equitably applied.

"Confidence is not arrogance — it's the difference between remembering and forgetting, between knowing and doing."

Why CBA Should Be the New Norm in CPD/CE

Traditional assessment methods, while serviceable, offer only a thin view of the learner's journey. They measure correctness but miss confidence, and they misrepresent the potential for behavior change. CBA brings a richer, more predictive lens to CPD/CE evaluation. It supports agile program design, better segmentation, deeper needs analysis, and stronger predictions of real-world behavior change. In a field where our ultimate goal is not just learning but lasting improvement in patient care, CBA equips us with far sharper tools.

"With CBA, we don't just track answers; we track readiness for the real world."

*This article was co-created from the transcript of my original presentation at #Alliance25 with the assistance of ChatGPT (GPT-4o).

Dr. McGowan has served in leadership positions in numerous medical education organizations and commercial supporters and is a Fellow of the Alliance (FACEhp). He founded the Outcomes Standardization Project, launched and hosted the Alliance Podcast, and most recently launched and hosts the JCEHP Emerging Best Practices in CPD podcast. In 2012, he co-founded ArcheMedX, Inc., a healthcare informatics and e-learning company, to apply his research in practice. You can follow him on X (formerly Twitter) (@BrianSMcGowan), connect with him on LinkedIn, or subscribe to his ReThink Learning Newsletter on Substack.