Medscape, LLC, Director of Outcomes and Insights

Vanderbilt University School of Medicine

- Professor of Medical Education and Administration

Vanderbilt University Medical Center

- Director of Education, Division of Continuing Medical Education

- Director, Vanderbilt MOC Portfolio Program

- Co-Director of Evaluation, GOL2D Project to Reimagine Residency

Member, Vanderbilt Academy for Excellence in Education

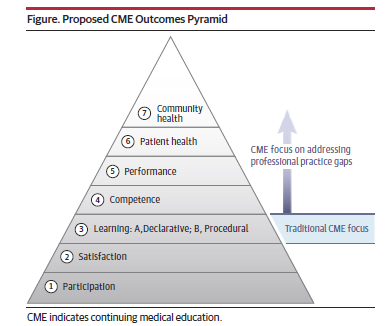

In 2009, Moore, Green and Gallis published a seminal article in outcomes measurement for continuing education for the health professions (CEHP).1 Many professionals in CEHP now know what Level 5 is versus Level 1 without batting an eye.

The article described a conceptual framework that expanded an earlier version to include instructional design as well as assessment and evaluation2; later, a pyramid was used to more clearly depict the framework,3 and more recently the pyramid was revised.4 See Figure 1 in the cited article.

Since the publication of the outcomes framework in 2009, numerous outcomes presentations at Alliance meetings and journal articles have been designed to show that CEHP impacts knowledge, competence and confidence. We know from the work of Cervero and Gaines that CME can have a positive impact on knowledge, competence and performance and, in some cases, patient health.5 Until this year, the studies have been limited in sample size and scope. A recent publication by Lucero and Chen, however, describes an outcomes assessment for more than 4,000 oncologists in 57 online certified CEHP programs, demonstrating that we can confidently state that knowledge and competence are associated with changes in confidence, and that confidence post-CEHP activity predicts commitment to change.6

Given this recent publication in the Journal of European CME’s Special Outcomes Issue, we spoke about what’s next for the outcomes pyramid. As we turn our attention to higher levels of the framework (Level 5 - performance change and Level 6 - improved patient health), it seems important to consider developing the framework into a validated theory of change. In other words, does assessment of clinician participants in CEHP activities show that what was learned about the content or the self as a result of that participation contributes to improvements in clinician performance and patient health status?

Fundamentally, the outcomes framework borrows the “if, then” format from the logic model.7, 8 The focus of a validation study would be to determine if there is evidence, for example, that shows that if a clinician participant participates in the learning activities at Level 4, then he or she is prepared to use the competence developed at Level 4 to provide the appropriate care to his or her patient at Level 5. In other words, what is the relationship among each of the levels of the outcomes framework? Does one have to demonstrate knowledge to move to developing competence in a specific area? This type of validity question should be asked at each transition between levels.

But there is more than that. Within each level, there are multiple variables along with possible modifiers and mediators that must be considered. What is the right combination? For example, in Moore’s articles and presentations, the importance of deliberate practice and expert feedback is emphasized, drawing heavily on Ericsson’s work. Is there evidence that supports that emphasis? Another focus of a validity study, therefore, is to determine if there is evidence to support the effectiveness of the combinations of variables (and their possible modifiers and mediators) that have been proposed for each level.

Beyond relationships among the levels and variables within the levels, there are questions to be answered about how to validly assess each level and the variables within it. For example, does a retrospective self-report question yield data as valid as a pre-to-post-education assessment? Does saying one’s competence improved validly capture a measurable improvement in competence?

It is an understatement to say that there will be a lot of work in a validity study of the outcomes framework.

Below are some specific areas of potential focus for each level of the outcomes pyramid:

Participation: The Outcomes Standardization Project (OSP) has defined what it means to be a learner and completer in a CEHP program. Does this fit all contexts and learning opportunities? It fits most standard online activities, but more should be fleshed out regarding implementation fidelity: what it means to “complete” the essential components of an activity, how those essential components are defined and how they relate to other outcomes.

Satisfaction: How should this be collected? What are the most valid and reliable measures of satisfaction (ie, what is tied to knowledge, competence, confidence and other desired outcomes)? Is the Net Promoter Score a good way of capturing satisfaction?

Knowledge/Competence: Does knowledge impact confidence to the same extent as competence? Does one have to develop knowledge before competence? How are the two related? How do you validly and reliably measure knowledge and/or competence in ways that go beyond multiple-choice questions?

Confidence: Where does confidence fit in? We saw from the Lucero and Chen study that it comes between knowledge/competence and intention to change.6 Define further how to assess validly and reliably.

Intention to Change and Commitment to Change: Where do these fit in? How should they be assessed validly and reliably?

Performance: Define and validate further self-reported performance change versus objective measures. Is there a difference?

Patient Health: Define and validate further self-reported patient health improvements versus objective measures; also define further for the field that patient health is not the patient receiving a new treatment; it is what results after that treatment.

Community/Public Health: What are best practices for reaching and assessing outcomes at this level? What are reliable and valid data sources for assessing this level?

We hope that these points will spark more research in validating a conceptual framework that has guided our field for over a decade, which will ultimately result in a theory of change for CME and its impact on desired outcomes.

References

- Moore DE, Jr., Green JS, Gallis HA. Achieving desired results and improved outcomes by integrating planning and assessment throughout a learning activity. J Contin Educ Health Prof. 2009;29(1):5-18.

- Moore DE, Jr. A framework for outcomes evaluation in the continuing professional development of physicians. In: Davis D, Barnes BE, Fox R, eds. The continuing professional development of physicians: From research to practice. American Medical Association Press; 2003:249-274.

- Moore DE, Jr. The Value Proposition for CME: The Importance of an Outcomes-Based Approach. Global Alliance for CME Annual Meeting. Munich, Germany; 2011.

- Stevenson R, Moore DE, Jr. Ascent to the Summit of the CME Pyramid. JAMA. 2018;319(6):543-544.

- Cervero RM, Gaines JK. The impact of CME on physician performance and patient health outcomes: an updated synthesis of systematic reviews. J Contin Educ Health Prof. 2015;35(2):131-138. doi:10.1002/chp.21290

- Lucero KS, Chen P. What Do Reinforcement and Confidence Have to Do with It? A Systematic Pathway Analysis of Knowledge, Competence, Confidence, and Intention to Change. Journal of European CME. 2020;9(1):1834759. doi:10.1080/21614083.2020.1834759

- W. K. Kellogg Foundation. Logic Model Development Guide. W.K.Kellogg Foundation; 2004.

- Knowlton L, Phillips C. The Logic Model Guidebook, Better Strategies for Better Results. Sage; 2009.