Continuing education (CE) and continuing professional development (CPD) have long relied on Moore’s outcomes framework to demonstrate value, from participation and satisfaction to learning, competence, performance and ultimately patient and community health.1,2 While foundational, that linear ladder often fails to capture the complex, iterative and context-dependent pathways by which education catalyzes change in real clinical environments. This article proposes a next-generation approach, the CIRCLE Model (Continuous, Integrated, Responsive, Contextual, Learning, Evaluation), represented as a circle to emphasize continuous improvement.

CIRCLE adds explicit attention to engagement depth, affect and motivation, contextual forces, sustainability, and equity, while retaining rigorous measurement of practice and patient impact. We outline the model’s six domains, recommended metrics and data sources, analytic designs suited to nonlinear improvement, and a practical vignette. The goal is not to discard Moore et al.’s contributions but to engender a conversation that will lead to an evolution of outcomes evaluation so it better matches how actual change happens in healthcare.

Why a New Model Now?

CE/CPD programs increasingly operate within learning health systems where quality improvement, digital tools and interprofessional teams interact dynamically. Clinicians learn asynchronously, in teams, across formats and amid organizational constraints they do not control. The causal pathway from “education” to “better outcomes” is therefore rarely a staircase/pyramid; it is a loop of signals, trials, feedback and course correction. Programs are also expected to demonstrate not just knowledge gains but measurable changes in behavior and health, sustained over time, with attention to equity and patient experience.

Moore’s framework has been invaluable for establishing a shared language and promoting evaluation beyond attendance and satisfaction. Its challenge is that it implies a progression in which each step depends on the one before it, and it places little emphasis on contextual enablers, affective drivers (sentiment, confidence, motivation), or durability of change. Program leaders often find themselves collecting excellent “Levels 1-4” data and piecemeal performance indicators that capture a snapshot in time, without a coherent story about why change did or did not occur, or what to do next. CIRCLE is designed to close that gap.

The CIRCLE Model at a Glance

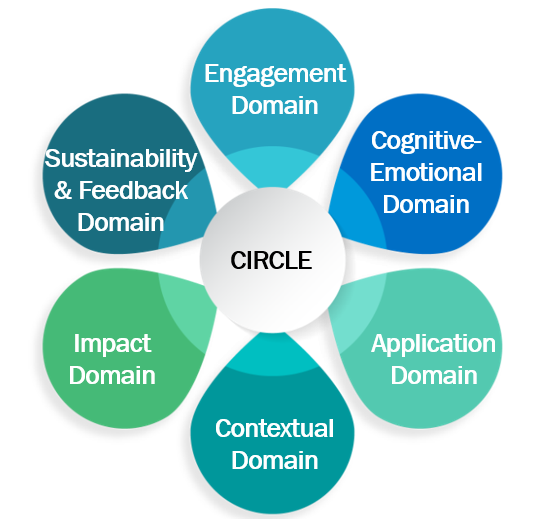

The CIRCLE Model organizes outcomes across six interrelated domains, intentionally nonhierarchical, with learning and change looping continuously:

- Engagement Domain — Depth and mode of participation (active vs. passive; team-based; longitudinal microlearning).

- Cognitive-Emotional Domain — Knowledge, reasoning, self-efficacy, motivation and intent to change.

- Application Domain — Attempts to apply learning, peer feedback and process measures of practice change.

- Contextual Domain — Organizational readiness, workflows, resources, policy and culture that enable or impede change.

- Impact Domain — Patient outcomes, patient-reported outcomes (PROs), caregiver experience, equity and guideline-concordant care.

- Sustainability & Feedback Domain — Durability of gains, spread/scale to new units and closed-loop learning back to design.

Visually, the model is depicted as a circle (see Figure 1) to emphasize that evidence can enter at any point, inform the others and return to improve program design. For example, a negative impact signal may point you back to contextual barriers; a strong engagement signal with weak application may prompt team-based practice coaching.

How CIRCLE Compares With Moore’s Framework

- Shared foundation: Moore’s framework remains foundational for establishing common language and pushing evaluation beyond attendance/satisfaction. CIRCLE is an evolution, not a revolution.

- Linear vs. iterative: CIRCLE abandons the stepwise ladder and reflects the real-world, nonlinear nature of clinical change. Evidence at any domain can explain variance at another (e.g., low self-efficacy suppressing practice change despite high knowledge).

- Context as a first-class construct: Rather than treating the environment as background noise, CIRCLE measures it intentionally. Context often explains why performance shifts stall.

- Affective science: Sentiment, confidence, motivation, intention to change and other psychological drivers are well known to affect behavior, yet they remain a long-standing gap in outcomes evaluation. CIRCLE measures them alongside knowledge.

- Sustainability and spread: Time is explicit. The model tests whether change endures and scales beyond early adopters.

- Human-centered outcomes: PROs, patient voice, caregiver experience and disparity-sensitive metrics are embedded in the Impact Domain, not optional add-ons.

- Practical measurement guidance: The suggested signals, data sources and pitfalls by domain provide implementable guidance.

With this comparison in place, we now shift from concept to practice. The next section proposes the operationalization of each CIRCLE domain to aid the implementation and evaluation of the model by laying out concrete signals, potential data sources, and common pitfalls.

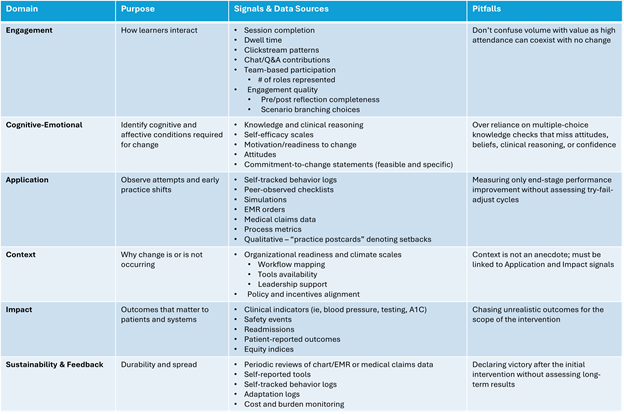

Measurement Guidance by Domain

- Engagement

Purpose: Characterize how learners interacted with the intervention.

Signals & Data Sources:

- Session completion, dwell time, clickstream patterns, chat/Q&A contributions.

- Team-based participation (e.g., number of roles per team attending).

- Engagement quality: pre/post reflection completeness; scenario branching choices.

Pitfalls: Avoid confusing volume with value; high attendance can coexist with no change.

- Cognitive-Emotional

Purpose: Surface the cognitive and affective conditions required for change.

Signals & Data Sources:

- Knowledge and clinical reasoning (script concordance, case-based questions).

- Self-efficacy scales (confidence to perform specific behaviors), motivation/readiness to change, and attitudes toward guidelines.

- Commitment-to-change statements structured with specificity and feasibility ratings.

Pitfalls: Over-reliance on multiple-choice knowledge checks that miss diagnostic reasoning or confidence.

- Application

Purpose: Observe attempts and early practice shifts — successes and friction.

Signals & Data Sources:

- Self-tracked behavior logs; peer observation checklists; simulation or OSCE performance; mini-audits of EMR orders, screenings or counseling.

- Process metrics (e.g., time-to-antibiotic, guideline fields completed).

- Qualitative evidence: brief “practice postcards” describing where attempts failed.

Pitfalls: Measuring only end-state performance without understanding the try-fail-adjust cycle.

- Context

Purpose: Explain why change is not occurring.

Signals & Data Sources:

- Organizational readiness and implementation climate scales; workflow mapping; tool availability (order sets, prompts); leadership support.

- Policy and incentive alignment; staffing ratios; competing initiatives.

Pitfalls: Treating context as anecdote. Use short, repeatable instruments and link them to Application and Impact signals.

- Impact

Purpose: Demonstrate outcomes that matter to patients and systems.

Signals & Data Sources:

- Clinical indicators (A1c, BP control, appropriate imaging), safety events, readmissions.

- Patient-reported outcomes and experience (shared decision-making, activation, symptom relief).

- Equity indices (gap closure across language, race/ethnicity, geography).

Pitfalls: Chasing distal outcomes too soon; adjust timing and scope to the intervention’s plausible influence.

- Sustainability & Feedback

Purpose: Determine durability and spread; feed learning back into design.

Signals & Data Sources:

- Run charts over months/quarters; maintenance of change after education ends.

- Adaptation logs (what teams changed as they scaled); policy or pathway adoption.

- Cost and burden monitoring to ensure improvements are feasible over time.

Pitfalls: Declaring victory after a single post-test; always inspect long tails.
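
One way to make the "inspect long tails" advice concrete is a simple run-chart shift check. The sketch below is illustrative only and is not part of the CIRCLE model itself. It assumes the commonly used run-chart rule that six or more consecutive points on one side of the baseline median signal a non-random shift; the monthly values and the function name are hypothetical.

```python
from statistics import median

SHIFT_RULE = 6  # assumed threshold: six or more consecutive points on one side


def longest_run_one_side(values, centerline):
    """Return the longest streak of consecutive points on one side of the centerline."""
    longest = current = 0
    side = 0  # +1 above, -1 below, 0 before any point has been counted
    for value in values:
        if value == centerline:
            continue  # points on the centerline neither extend nor break a run
        value_side = 1 if value > centerline else -1
        if value_side == side:
            current += 1
        else:
            side, current = value_side, 1
        longest = max(longest, current)
    return longest


# Hypothetical monthly rates (%) of non-indicated prescribing
baseline = [41, 39, 43, 40, 42, 38]    # months before the education
follow_up = [24, 20, 15, 14, 13, 13]   # months after the education

centerline = median(baseline)
run_length = longest_run_one_side(follow_up, centerline)
sustained_shift = run_length >= SHIFT_RULE
print(f"Baseline median: {centerline}; longest run: {run_length}; shift: {sustained_shift}")
```

A check like this only flags that a change has persisted; it does not explain why, which is where the Context and Application signals come in.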

Analytic Approaches That Fit a Circle, not a Ladder

With the domain-level measures in place, the next step is choosing analyses that honor CIRCLE’s nonlinear, iterative character. The methods that follow are included because they preserve temporal dynamics, account for team/site clustering, and make explicit how context and cognition drive impact.

- Interrupted time series (ITS) and run charts to visualize pre/post trends while respecting seasonality and autocorrelation.

- Difference-in-differences (DiD) when you have a comparable unit not exposed to the education.

- Mixed-effects models to account for clustering (clinicians within teams within sites).

- Mediation and moderation analyses to test how context and cognitive-emotional signals explain application and impact.

- Qual/quant integration where qualitative “practice postcards” identify barriers that are then quantified across sites.
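
To show the arithmetic behind one of these designs, the following is a minimal difference-in-differences sketch. It assumes monthly rates are available for an exposed unit and a comparable unexposed unit, and that the two would have followed parallel trends without the education. All numbers, unit labels and the function name are hypothetical; a real analysis would also need standard errors and the clustering adjustments noted above.

```python
from statistics import mean


def did_estimate(exposed_pre, exposed_post, comparison_pre, comparison_post):
    """Change in the exposed unit minus change in the comparison unit."""
    exposed_change = mean(exposed_post) - mean(exposed_pre)
    comparison_change = mean(comparison_post) - mean(comparison_pre)
    return exposed_change - comparison_change


# Hypothetical monthly proportions of non-indicated prescribing
exposed_pre = [0.42, 0.40, 0.41]       # exposed unit, before the education
exposed_post = [0.26, 0.24, 0.22]      # exposed unit, after the education
comparison_pre = [0.39, 0.38, 0.40]    # comparison unit, same months
comparison_post = [0.37, 0.36, 0.35]

effect = did_estimate(exposed_pre, exposed_post, comparison_pre, comparison_post)
print(f"DiD estimate: {effect:+.3f}")
```

In this made-up data the exposed unit improves by 17 percentage points and the comparison unit by 3, so roughly 14 points of the improvement would be attributed to the education rather than to background trends.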

A Brief Vignette: Antimicrobial Stewardship in the Emergency Department (ED)

The following hypothetical vignette illustrates how the CIRCLE model might be applied in practice. To show how CIRCLE operates end to end, we trace a single stewardship effort through each domain as one continuous narrative.

Our Example:

An academic medical center has used the CIRCLE model to support appropriate antibiotic prescribing for uncomplicated bronchitis within its Emergency Department.

- Engagement Domain: The center launches a microlearning plus two team huddles; 86% of ED providers participate, and clickstream data show high interaction with branched cases, establishing shared mental models across staffing shifts. This engaged start sets the conditions for the gains in knowledge and confidence that follow.

- Cognitive-Emotional Domain: Case-based questions show improved clinical reasoning, and self-efficacy to explain no-antibiotic decisions rises from 54% to 78%; 62% of clinicians commit to using a new discharge handout. These shifts in understanding and confidence prime early behavior change at the point of care.

- Application Domain: Mini‑audits of 100 charts/month show a drop in non‑indicated prescribing from 41% to 24%, though weekend variation suggests fragile uptake. The uneven pattern points to environmental barriers that require attention before impact can stabilize.

- Context Domain: Weekend staffing and limited access to printers surface as the main impediments; leadership adds digital handout links and pharmacist consult hours. By removing these obstacles, the team enables more reliable application of the intended behaviors.

- Impact Domain: Within 8 weeks, inappropriate prescribing falls to 15%; patient callbacks for “not getting antibiotics” decline; satisfaction scores hold steady. With early benefits evident, the focus shifts from short‑term gains to durability and spread.

- Sustainability & Feedback: At three months, rates stabilize at 13%; the playbook spreads to urgent care, and a bilingual handout is added based on patient input. These adaptations close the loop and feed forward into the next cycle of improvement.

In this hypothetical example, the CIRCLE design would help leaders tell the story. Initial gains were driven by confidence and intent. Sustaining gains required fixing workflow and resource gaps. Without the Context and Sustainability Domains, the program might have declared victory too soon, or missed the barrier entirely.
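
As a rough illustration of how an evaluator might check that the early Application signal is more than noise, the sketch below runs a two-proportion z-test on the vignette's mini-audit figures (41% versus 24% non-indicated prescribing). It assumes each figure comes from an independent audit of exactly 100 charts, which the vignette does not specify, and it ignores clustering by clinician; the function name is hypothetical.

```python
from math import erf, sqrt


def two_proportion_z(events_a, n_a, events_b, n_b):
    """Pooled two-proportion z-test; returns (z, two-sided p-value)."""
    p_a, p_b = events_a / n_a, events_b / n_b
    pooled = (events_a + events_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_a - p_b) / se
    normal_cdf = 0.5 * (1 + erf(abs(z) / sqrt(2)))  # standard normal CDF at |z|
    return z, 2 * (1 - normal_cdf)


# Assumed audit counts: 41 of 100 charts before, 24 of 100 charts after
z, p_value = two_proportion_z(41, 100, 24, 100)
print(f"z = {z:.2f}, two-sided p = {p_value:.3f}")
```

Because the vignette is hypothetical, the result is only illustrative. With real audit data, a mixed-effects model would be the better choice once clinicians are nested within teams and sites.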

CIRCLE: A Clear, Honest Model

CE/CPD strives to change practice in living systems, not in controlled environments. That reality calls for an evaluation approach that treats context, motivation, trial-and-error, and time as integral, not incidental, to outcomes. The CIRCLE model reframes evaluation as a continuous loop, connecting engagement, mindsets, application, context, impact and sustainability. It preserves the rigor of outcomes measurement while adding the practical insight educators need to improve programs and the care patients receive.

Whether you choose to adopt some form of this framework or use it to augment existing reports, CIRCLE’s ultimate intent is to engender a conversation within the CE/CPD community that leads to models offering a clearer, more honest story about how education translates to better patient care, and how it can keep improving over time. I welcome dialogue and collaboration to further evaluate CIRCLE or other frameworks and invite interested readers to contact me at wmencia@glc.healthcare to join the conversation.

Table 1:

References

1. Moore DE, Jr., Green JS, Gallis HA. Achieving desired results and improved outcomes: integrating planning and assessment throughout learning activities. J Contin Educ Health Prof. 2009;29(1):1-15.

2. Moore DE, Jr., Chappel K, Sherman L, Vinayaga-Pavan M. A conceptual framework for planning and assessing learning in continuing education activities designed for clinicians in one profession and/or clinical teams. Med Teach. 2018;40(9):904-913.

William Mencia, MD, FACHEP, is a physician by training who received his medical doctorate in 1997 and has spent the last 27 years in CPD. Since joining GLC in 2019, he has held several roles, from developing the scientific affairs and clinical strategy teams to currently applying his passion for outcomes and instructional design toward improving patient lives. He is an Alliance Fellow, past chair of the Alliance Annual Conference, and a past Alliance and ACCME volunteer, and has been published numerous times. Outside of work, William enjoys traveling, bicycling, pickleball, the beach and quiet evenings at home.
